
I am a first-year Ph.D. student at the University of Amsterdam (UvA) in the VIS Lab, supervised by Prof. Dr. Cees Snoek and Yingjun Du. My research focuses on multimodal foundation models as part of the Horizon Europe ELLIOT project. I received my M.Sc. degree from the University of Science and Technology of China (USTC). After graduation, I worked as a research assistant at Westlake University with Prof. Yefeng Zheng. I also received valuable guidance from Dr. Shiwei Liu, Dr. Xiantong Zhen, and Dr. Gaowen Liu. Feel free to contact me for research collaborations.

Email / CV / Google Scholar / Twitter / GitHub


[Nov 2025] One paper was accepted to WACV 2026.

[Sep 2025] One paper was accepted to NeurIPS 2025.

[Sep 2024] One paper was accepted to NeurIPS 2024.

[Apr 2024] One paper was accepted to a CVPR 2024 Workshop.

[Apr 2023] One paper was accepted to ICML 2023.


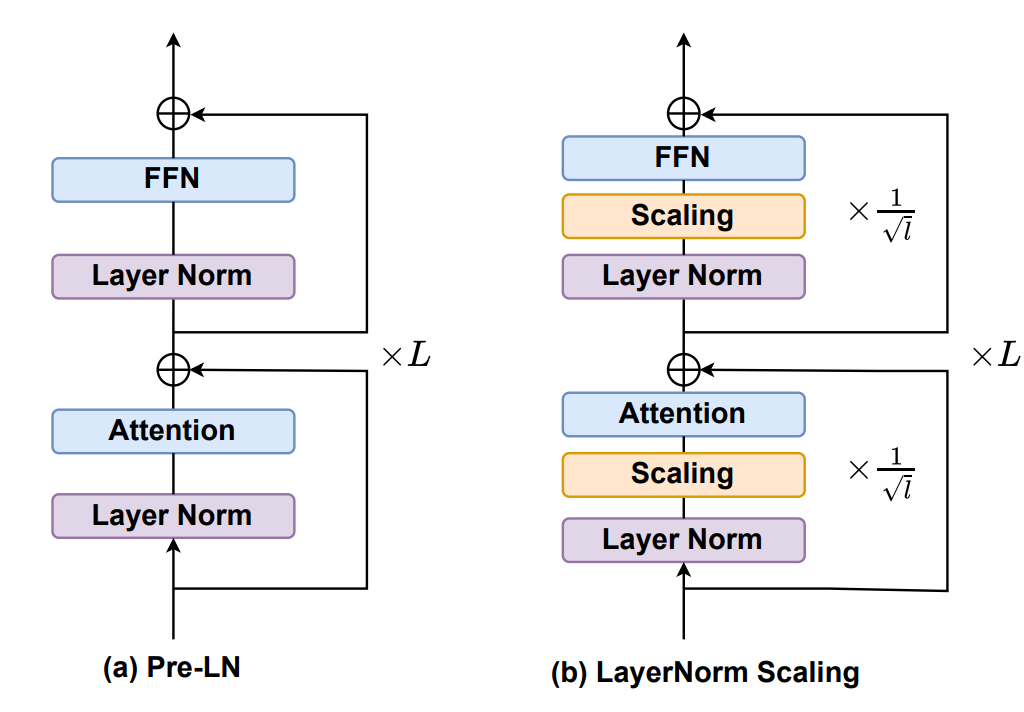

Wenfang Sun*, Xinyuan Song*, Pengxiang Li*, Lu Yin, Yefeng Zheng, Shiwei Liu

NeurIPS, 2025

paper / code

We propose LayerNorm Scaling, a simple yet effective modification that mitigates the variance explosion in deep Transformer layers, enabling Large Language Models to fully leverage their depth and achieve consistently better pre-training and fine-tuning performance.


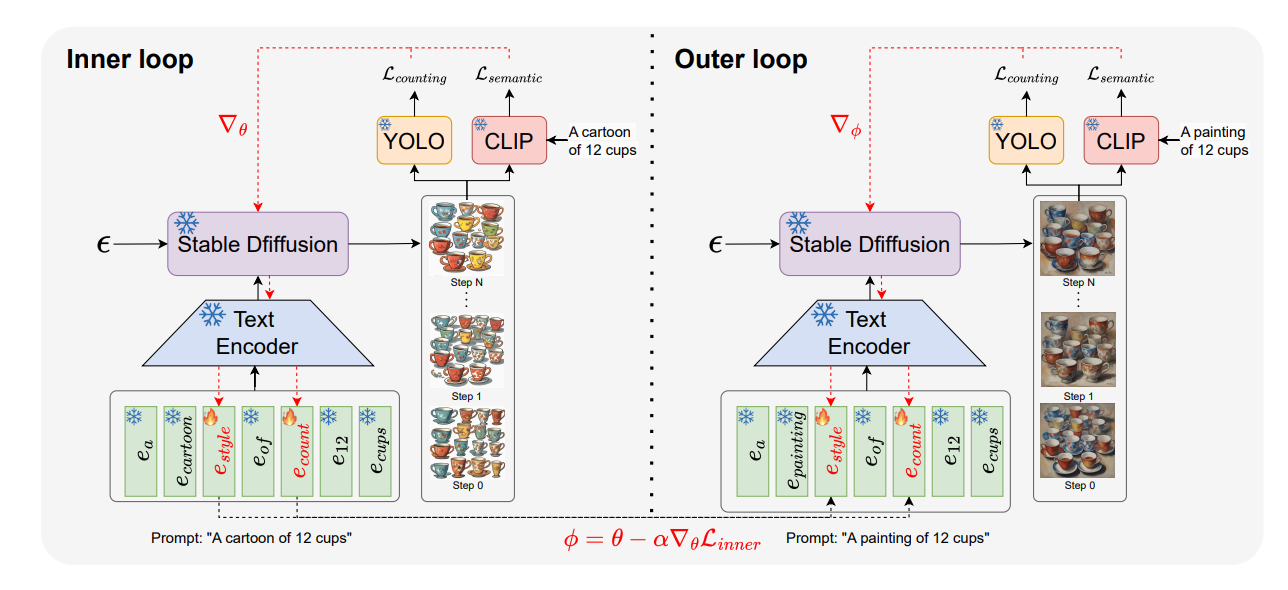

Wenfang Sun*, Yingjun Du*, Gaowen Liu, Yefeng Zheng, Cees G. M. Snoek

WACV, 2026

paper

We propose QUOTA, a domain-agnostic optimization framework for text-to-image models that enables accurate object quantification across unseen domains without retraining. It combines dual-loop meta-learning with prompt, counting, and domain tokens to achieve superior accuracy and adaptability.


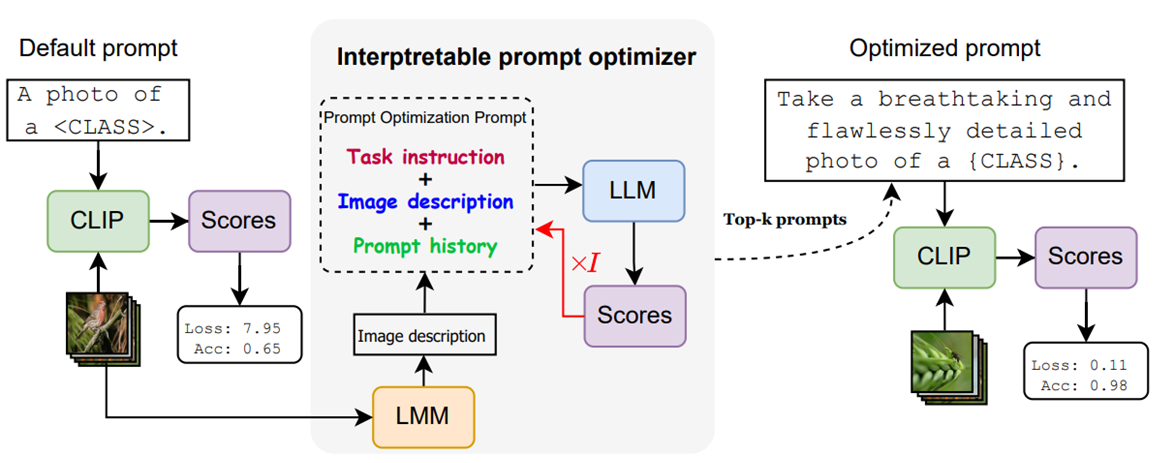

Wenfang Sun*, Yingjun Du*, Cees Snoek

NeurIPS, 2024

paper / code

We propose IPO, an interpretable prompt optimizer that uses LLMs to dynamically generate and refine prompts, while incorporating an LMM to enhance textual-visual interaction.


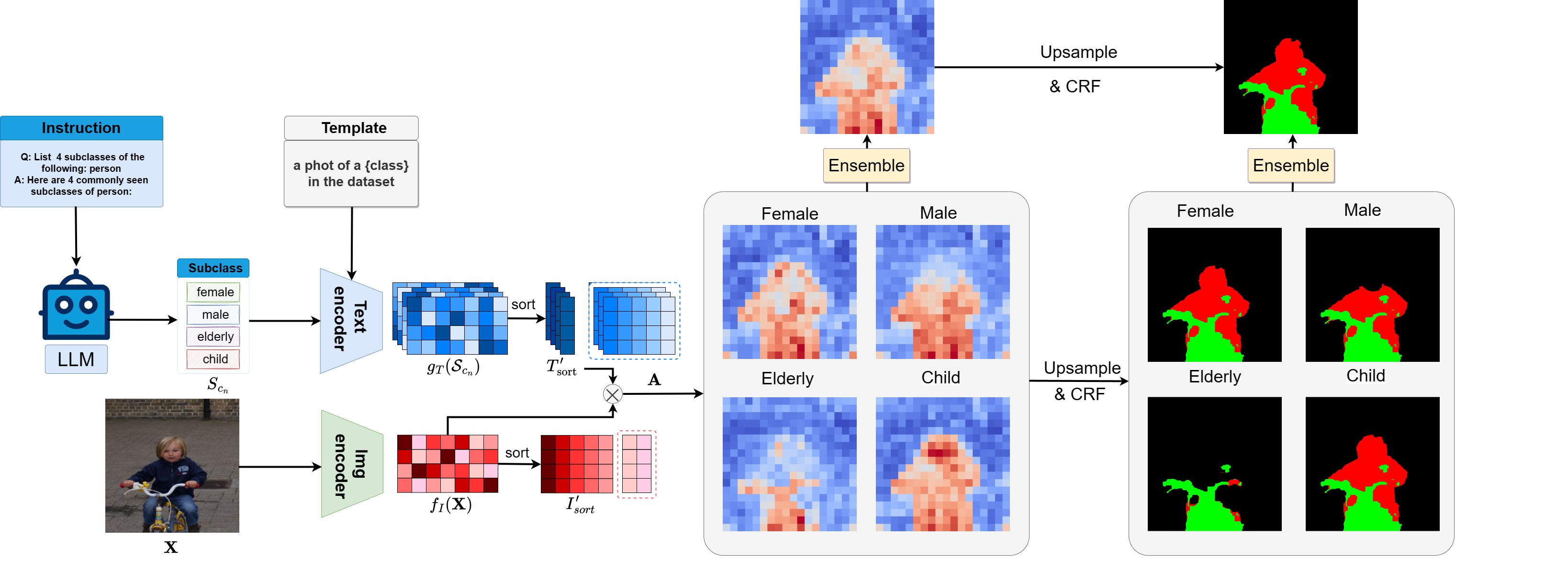

Wenfang Sun*, Yingjun Du*, Gaowen Liu, Ramana Rao Kompella, Cees Snoek

CVPR Workshop, 2024

paper

We propose a novel text-supervised semantic segmentation framework that leverages large language model supervision for enhanced class descriptors, refined subclass generation, and effective ensembling for improved segmentation accuracy.


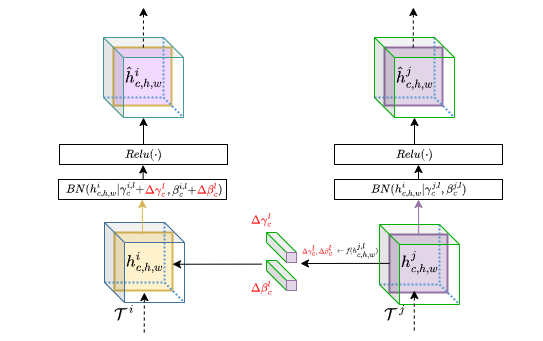

Wenfang Sun*, Yingjun Du*, Xiantong Zhen, Fan Wang, Ling Wang, Cees Snoek

ICML, 2023

paper / code

We propose a method for few-shot learning with fewer tasks, which we call MetaModulation.


This website is based on Jon's source code.